This might seem like a no-brainer, but many tests used for selection/promotion have no validity. In lay terms, their scores predict absolutely nothing! Not only do these tests fail at their basic purpose, but they also invite legal challenges, favor the inept, and eliminate the qualified. That’s why validation is so important. We all know personal opinions and unstructured interviews are lousy tests. Test scores (including interview scores) are supposed to accurately predict job performance.

Tell Me About Yourself

Asking someone to “Tell me about yourself” does not sound like a test question. But what else would you call asking a question, evaluating the answer, and making a decision? It makes no difference whether the question is written or spoken. If you make a decision based on a candidate’s answer, it’s a test. Now, how about this kind of test question:

“Give me an example of when you solved a difficult problem. Tell me what the problem was, what you did, and the result.”

Better, yes? But only if the interviewer knows that problem solving is important to the job, knows the kind of problem solving required, and can tell good problem solving from bad; can pry details from the candidate; and uses a standardized scoring sheet. Why get all nitpicky? Structure is the best way to make an interview question a good predictor of job performance.
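
A standardized scoring sheet can be as simple as fixed rating anchors that every interviewer applies the same way. The sketch below is hypothetical: the three dimensions (problem, actions, result) come from the interview question above, but the 1-5 anchors and the equal weighting are assumptions for illustration, not a validated rubric.

```python
from dataclasses import dataclass

# Hypothetical anchors shown to every interviewer so that each one rates
# each dimension on the same 1-5 scale.
ANCHORS = {
    1: "vague or no example; cannot supply details when probed",
    3: "clear example with some detail; own role in the solution partly unclear",
    5: "specific example; own actions and measurable result described in detail",
}


@dataclass
class ProblemSolvingAnswer:
    problem: int  # how clearly the candidate described the problem
    actions: int  # how specifically they described what they did
    result: int   # how concretely they described the outcome

    def score(self) -> float:
        ratings = (self.problem, self.actions, self.result)
        if any(not 1 <= rating <= 5 for rating in ratings):
            raise ValueError("each rating must be between 1 and 5")
        # Equal weights are an assumption; a validation study would set them.
        return sum(ratings) / len(ratings)


answer = ProblemSolvingAnswer(problem=4, actions=3, result=5)
print(answer.score())  # 4.0
```

The point is not the arithmetic but the consistency: every candidate is judged against the same anchors, so scores can later be checked against job performance.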

You may think unstructured interviews are the bread-and-butter sandwich of recruiting, but look between the slices: usually you’ll find mold and rancid butter. Interview questions need validation just as much as formal tests.

You cannot trust any selection tool that is not valid! Consider Merriam-Webster’s definition of valid: having authority, relevant and meaningful, appropriate to the objective, supported by truth, basis for flawless reasoning, evidence, justifiable. Now, isn’t that something you want?

False Sense of Security

Once upon a time, I worked for a large consulting company. It was filled with administrative assistants who could not spell and used bad grammar. After listening to client complaints, I went to the internal HR department and asked if they gave AA applicants a spelling and grammar test. “Yes,” they answered, “We use one developed by the owner.” A quick examination showed the test looked OK (i.e., was face valid), so I asked if I could see the scores from the last 100 hires. Guess what? Passing rates averaged about 95%!

High AA scores might have given HR a warm and fuzzy feeling, but it was just another case of organizational incontinence. I considered giving them a box of departmental-sized Depends, but I think they would have missed the point. Their test did not test anything! It was not valid. While worker bees labored to present a professional image to big-buck clients, incompetent AAs were misspelling words and using atrocious grammar. I’m sure the president would have responded if he had known, but HR was not going to rock the boat if their lives depended on it.
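
One warning sign was visible in the pass rate alone. If nearly every applicant passes, the cutoff separates almost no one, so it cannot be screening out weak spellers. Here is a minimal sketch, assuming you have a list of past applicant scores and the test's cutoff; the 90% warning threshold is an arbitrary illustration, not a professional standard, and the scores are invented.

```python
def pass_rate(scores, cutoff):
    """Fraction of applicants scoring at or above the cutoff."""
    if not scores:
        raise ValueError("no scores supplied")
    return sum(score >= cutoff for score in scores) / len(scores)


def screens_anyone(scores, cutoff, max_pass_rate=0.90):
    """Return False when so many applicants pass that the test filters almost no one.

    max_pass_rate is an illustrative assumption, not an industry standard.
    """
    return pass_rate(scores, cutoff) <= max_pass_rate


# Hypothetical scores for 20 applicants on a 100-point test with a cutoff of 70.
scores = [72, 88, 91, 95, 79, 83, 90, 99, 76, 85,
          93, 81, 97, 74, 89, 92, 86, 78, 94, 65]
print(f"pass rate: {pass_rate(scores, 70):.0%}")      # pass rate: 95%
print("screens anyone?", screens_anyone(scores, 70))  # screens anyone? False
```

A sensible pass rate does not prove a test is valid; it only rules out the most obvious failure. Proving validity still takes a validation study.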

Self-developed tests may seem like a good idea, but they are usually inaccurate, invalid, or poorly maintained. Bogus tests harm the organization because they give a false sense of security, while actually doing nothing to improve quality of hire. This is especially true when managers get frustrated and decide unilaterally to make up their own test. If it’s important enough to test, it is important enough to validate. Otherwise, forget it. Even high-profile assessment organizations make foolish decisions.

From the Frying Pan Into the Fire

Math and reading are becoming problematic. I’ve heard from dozens of organizations about employees who cannot read, calculate, or write. This becomes a serious issue when an organization automates, adopts computer-driven equipment, or faces frequent or steep learning curves. In response, uninformed people think grabbing a test off the shelf will solve their problems. I’ve even seen organizations use reading tests developed for placing students in the right English class.

Testing studies show a three-bears effect. That is, human KSAs (knowledge, skills, and abilities) come in three sizes: too little, too much, and just right for the job. For example, we know intelligent people tend to perform better than unintelligent ones, and intelligent people tend to score higher on abstract verbal and numerical tests. But now life gets challenging …

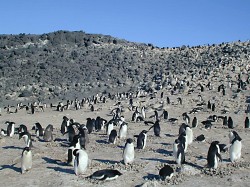

It’s a fact of life (at the group level) that intelligence test scores cluster into different curves depending on demographics. There are plenty of theories why, but we’ll conveniently ignore them. Let’s just say we have five demographic groups: Pandas, Penguins, Puppies, Kittens, and Bunnies. Pandas score an average of 85, with 2/3 falling between 70 and 100; Penguins average 93, with 2/3 between 78 and 108; Puppies average 100, with 2/3 between 85 and 115; Kittens average 107, with 2/3 between 92 and 122; and Bunnies average 114, with 2/3 between 99 and 129.
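
To see what these curves mean for a single cutoff score, we can estimate how much of each group scores at or above it. This sketch assumes each group's scores are normally distributed with a standard deviation of 15 (the spread implied by the Pandas and Puppies figures, where about 2/3 fall within one standard deviation of the mean). The cutoff of 100 is hypothetical.

```python
from statistics import NormalDist

# Group means from the example; a common SD of 15 is an assumption.
GROUP_MEANS = {"Pandas": 85, "Penguins": 93, "Puppies": 100, "Kittens": 107, "Bunnies": 114}
SD = 15


def share_at_or_above(cutoff, mean, sd=SD):
    """Expected fraction of a normally distributed group scoring at or above the cutoff."""
    return 1 - NormalDist(mean, sd).cdf(cutoff)


cutoff = 100  # hypothetical cutoff score
for group, mean in GROUP_MEANS.items():
    low, high = mean - SD, mean + SD  # range holding roughly the middle 2/3
    print(f"{group:8s} middle 2/3: {low}-{high}  share >= {cutoff}: "
          f"{share_at_or_above(cutoff, mean):.0%}")
```

With this cutoff, about 16% of Pandas but about 82% of Bunnies would pass, even though the cutoff treats every individual identically.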

Demographic membership does not make someone smart or dull. Individual Pandas can still score substantially higher than individual Bunnies, and individual Bunnies can score lower than individual Penguins. At the group level, there will simply be fewer high-scoring Pandas and Penguins than high-scoring Kittens and Bunnies. Now this next part is important!
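
Group averages say little about any one person. Under the same assumptions (independent, normally distributed scores with a common standard deviation of 15), the difference between a random member of one group and a random member of another is itself normal. That lets us estimate how often the lower-scoring group's member comes out ahead.

```python
from math import sqrt
from statistics import NormalDist

SD = 15  # assumed common standard deviation for every group


def prob_outscores(mean_a, mean_b, sd=SD):
    """P(a random member of group A outscores a random member of group B).

    Assumes independent normal scores with equal SD, so A - B is normal with
    mean (mean_a - mean_b) and standard deviation sqrt(2) * sd.
    """
    diff = NormalDist(mean_a - mean_b, sqrt(2) * sd)
    return 1 - diff.cdf(0)


pandas, bunnies = 85, 114
print(f"P(random Panda > random Bunny) = {prob_outscores(pandas, bunnies):.0%}")
```

For Pandas versus Bunnies this works out to roughly 9%: uncommon at the group level, but far from impossible for any individual pair.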

We don’t need to be rocket scientists to know that scores that are too low lead to mistakes, bad products, and safety violations, while scores well above what the job requires often lead to boredom and turnover. Balancing demographic differences against our need for “just-right” intelligence, how do we establish and defend cutoff scores?
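
A “just-right” requirement is really a band with a floor and a ceiling. This sketch estimates the share of each group that lands inside such a band, again assuming normal distributions with a common standard deviation of 15. The band of 90 to 120 is purely hypothetical; in practice the band would have to come out of a validation study.

```python
from statistics import NormalDist

GROUP_MEANS = {"Pandas": 85, "Penguins": 93, "Puppies": 100, "Kittens": 107, "Bunnies": 114}
SD = 15  # assumed common standard deviation


def share_in_band(low, high, mean, sd=SD):
    """Expected fraction of a group scoring inside the 'just right' band [low, high]."""
    dist = NormalDist(mean, sd)
    return dist.cdf(high) - dist.cdf(low)


# Hypothetical band: below 90 risks errors, above 120 risks boredom and turnover.
low, high = 90, 120
for group, mean in GROUP_MEANS.items():
    print(f"{group:8s} in band: {share_in_band(low, high, mean):.0%}")
```

Moving either end of the band shifts every group's share, which is exactly why the cutoffs need evidence behind them rather than guesswork.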

No organization I know of is forced to hire unqualified people. But the EEOC and OFCCP expect you to show that your requirements reflect a genuine business need and job requirement. Oh yes, and it is incumbent on employers to give new employees “reasonable” time to learn the necessary skills. If you screen out applicants based on something they could learn in a reasonable time, you had better be able to explain why.

A well-done validation study keeps the input funnel filled, ensures you hire only fully qualified employees, keeps training times reasonable, minimizes adverse impact at the group level, and documents both business necessity and job requirements.
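
Adverse impact is commonly screened with the four-fifths rule from the federal Uniform Guidelines on Employee Selection Procedures. Under that rule, a group's selection rate below four-fifths (80%) of the rate for the group with the highest rate is generally regarded as evidence of adverse impact. The sketch below applies that rule of thumb to hypothetical applicant and hire counts.

```python
def selection_rates(applicants, hired):
    """Selection rate (hired / applicants) for each group."""
    return {group: hired[group] / applicants[group] for group in applicants}


def four_fifths_check(applicants, hired):
    """Impact ratio of each group's selection rate to the highest group's rate.

    Returns {group: (impact_ratio, flagged)}, where flagged is True when the
    ratio falls below 0.8 under the four-fifths rule of thumb.
    """
    rates = selection_rates(applicants, hired)
    top = max(rates.values())
    return {group: (rate / top, rate / top < 0.8) for group, rate in rates.items()}


# Hypothetical applicant and hire counts.
applicants = {"Pandas": 50, "Penguins": 60, "Puppies": 80, "Kittens": 70, "Bunnies": 40}
hired = {"Pandas": 10, "Penguins": 15, "Puppies": 24, "Kittens": 21, "Bunnies": 12}
for group, (ratio, flagged) in four_fifths_check(applicants, hired).items():
    print(f"{group:8s} impact ratio {ratio:.2f}{'  <- below 4/5' if flagged else ''}")
```

In this made-up example only Pandas fall below the line (an impact ratio of about 0.67). A flag is not a legal verdict, but it is the point where you will be asked for validation evidence.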

In the next part, I’ll discuss a few differences between validation and litigation.